Not many people get excited about “parking” or “data quality,” but ensuring INRIX Parking is the highest quality solution for drivers has been one of the most enjoyable projects I’ve worked on as a Data Scientist at INRIX.

Quality is at the heart of every product we build. We are passionate about quality, as evidenced by our ISO 9001:2015 and third-party Automotive SPICE® certifications.

In the first post of this four-part blog series examining parking data quality, I will outline our key criteria for evaluating parking data quality.

Key Criteria For Measuring Parking Data Quality Success:

What factors does INRIX take into account to measure parking data quality?

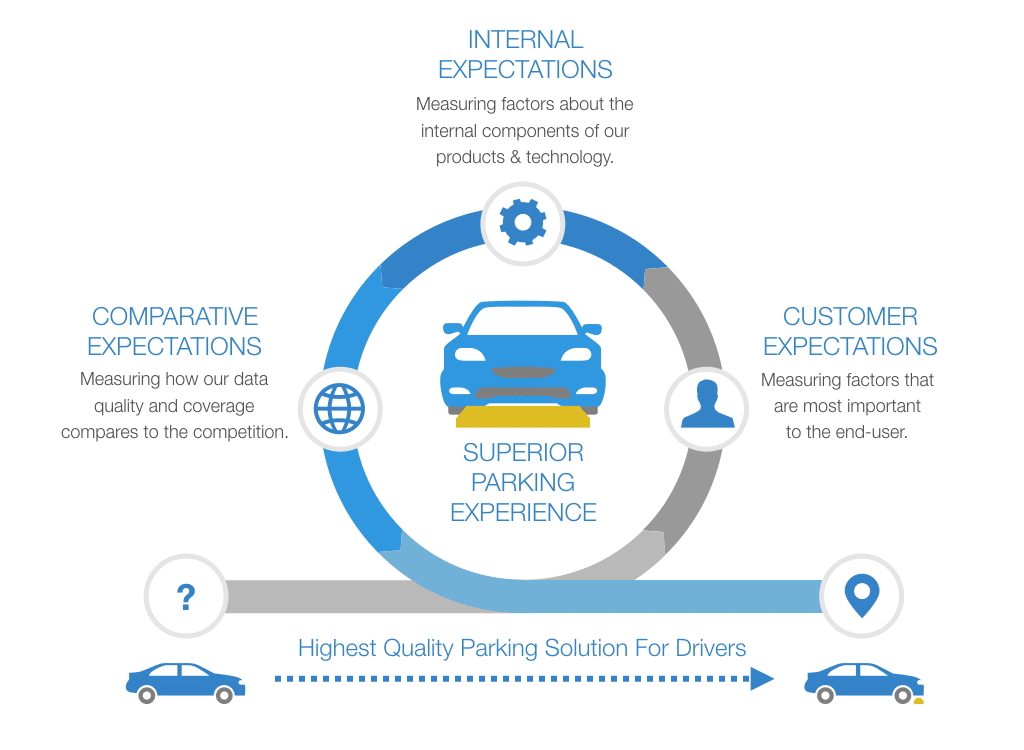

When deciding how to measure the quality of a new product, we approach the problem from three points of view: 1) Customer Expectations, 2) Internal Expectations, and 3) Comparative Expectations.

1) CUSTOMER EXPECTATION FACTORS:

What measures can we create that directly speak to what is important to the end user?

A few examples of parking data quality measures tied directly to customer expectations include:

- Static information – rates and hours of operation for lot and street parking

- Dynamic information – current occupancy levels

- Entrance point information – used to navigate drivers to the parking location

- Data freshness – with over 212,000 lots in our database, it’s important that the data is kept up to date and tested

To ease parking pain, we are continuously striving to have the most comprehensive parking solution in cities and destinations around the world where drivers need this information the most. This expansion includes adding more off-street, on-street and, most important, dynamic parking availability information.

2) INTERNAL EXPECTATION FACTORS:

What measures can we create that assess the internal components of the product?

These measures assess the product directly against internal expectations. They matter because the more fundamental the data, the more important it is that the data is correct. In other words: garbage in, garbage out, a principle that was obvious even to the inventor of the first programmable computing device. Imagine how difficult it would be to calculate the correct parking cost for a driver if the rate card were incorrectly stored or translated. INRIX created metrics to address this important component of the product, even though the data is not directly visible to the end customer.

3) COMPARATIVE EXPECTATION FACTORS:

What measures can we create to compare our quality to competitors?

In many ways, comparative measurement is the least important of the three, because INRIX builds products to delight customers, not to win head-to-head competitions. However, automakers need to compare “apples to apples” when evaluating parking providers. With that in mind, INRIX benchmarks competing products to support side-by-side comparisons.

Comparing our data quality and coverage to the competition comes down to two basic questions: how many parking lots do we have data for in major cities, and how does our accuracy compare? Our internal quality bar is straightforward, but when it comes to how our quality compares with other providers, we have only indirect ways of gauging whether we have reached that goal. It’s a “known unknown”: if we knew exactly how many lots there were, we would already have them all. This is where it makes sense to compare our quality to the competition, so we can identify and close any gaps.

Our next post will look into the measures that speak to what is important to the end user.

In the remaining posts of this series, we will look at each of these factors in more detail, discuss the complexity of developing metrics for on-street parking, and examine the kinds of factors that go into building a set of off-street metrics.